I don't get the Strong Law of Large Numbers

I’ve been reading the article An Elementary Proof of the Strong Law of Large Numbers since it’s closely related to a result that I used in my RNN theory. I thought, once I understand it, I can refine my proof. However, I don’t understand it. Yet :)

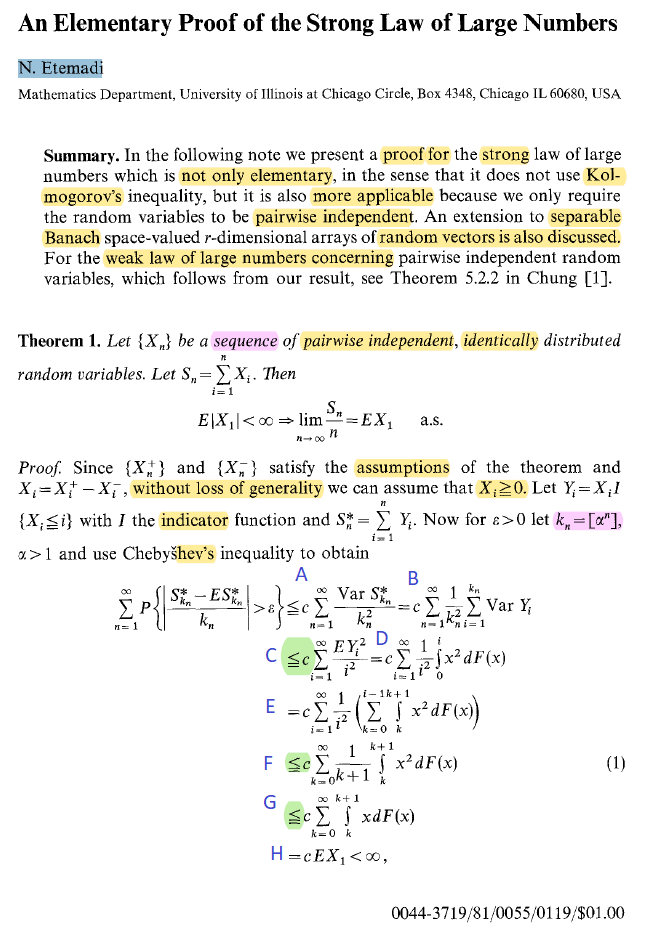

As I understand more I will update this post. For now, I will just write down my thoughts. This is the first page, and the blue letters indicate the inequalities I will prove in more detail.

So, A. is simply Chebyshev’s inequality, B only needs to assume indipendence, and C is the first part that I found tricky. Possibly obvious for a mathematician but not for me. First I wasn’t sure about how I could change the summation order, so, this is what I did

\[\begin{align} \sum_{j=1}^{\infty}\sum_{i=1}^{\alpha^j} f(i)g(j) =&\sum_{j=1}^{\infty}\sum_{i=1}^{\infty} f(i)g(j)H(\alpha^j \geq i )\\ =&\sum_{i=1}^{\infty}\sum_{j=1}^{\infty} f(i)g(j)H(\alpha^j \geq i )\\ =&\sum_{i=1}^{\infty}\sum_{j=1}^{\infty} f(i)g(j)H(j \geq \log_\alpha i )\\ =&\sum_{i=1}^{\infty}\sum_{j=\log_\alpha i}^{\infty} f(i)g(j)\\ \end{align}\]where $H$ is the Heaviside step function. Then I apply it to the sum in C, and I get

\[\begin{align} \sum_{n=1}^{\infty}\frac{1}{\alpha^{2n}}\sum_{i=1}^{\alpha^n}f(i)=& \sum_{i=1}^{\infty}f(i)\sum_{n=\log_\alpha i}^{\infty}\frac{1}{\alpha^{2n}}\\ =& \sum_{i=1}^{\infty}f(i)\Big(\sum_{n=1}^{\infty}\frac{1}{\alpha^{2n}}-\sum_{n=1}^{\log_\alpha i - 1}\frac{1}{\alpha^{2n}}\Big)\\ =& \sum_{i=1}^{\infty}f(i)\Big(\frac{1}{1-r}-\frac{1-r^{\log_\alpha i}}{1-r}\Big) && \text{with } r=\alpha^{-2}\\ =& \sum_{i=1}^{\infty}f(i)\frac{r^{\log_\alpha i}}{1-r} \\ =& \sum_{i=1}^{\infty}\frac{1}{i^2}f(i)\frac{1}{1-r} \\ =& \sum_{i=1}^{\infty}\frac{1}{i^2}f(i)\frac{\alpha^2}{\alpha^2-1} \\ =& \frac{\alpha^2}{\alpha^2-1}\sum_{i=1}^{\infty}\frac{1}{i^2}f(i) \\ \end{align}\]Pretty cool eh? There might be some mistakes, but I think easy to fix. Essentially I used classic geometric series results that you can find on Wikipedia. Also, notice that all the constants that will appear, will hide within $c$ in the paper. Also you need that $Var X \leq {\rm I\kern-.3em E} X^2$, which is always true given that $Var X = {\rm I\kern-.3em E} X^2 - ({\rm I\kern-.3em E} X)^2$. D only requires the definition of probability distribution and its cumulative $f(x)dx = dF(x)$, lower bound of integration is zero because we are dealing with positive random variables, and the upper bound is $i$ because of the indicator function in the definition of $Y_i$. E only splits the integration domain into chunks to be able to use the trick that follows. F a similar double sum as before

\[\begin{align} \sum_{i=1}^{\infty}\frac{1}{i^2}\sum_{k=0}^{i-1}f(k)=& \sum_{k=0}^{\infty}f(i)\sum_{i=k+1}^{\infty}\frac{1}{i^2}\\ \leq& \sum_{k=0}^{\infty}f(i)\sum_{i=k+1}^{\infty}\frac{1}{i}\\ =& \sum_{k=0}^{\infty}f(i)\Big(\frac{\pi^2}{6}-\sum_{i=0}^{k+1}\frac{1}{i}\Big)\\ =& \frac{\pi^2}{6}\sum_{k=0}^{\infty}f(i)\Big(1-\frac{2k}{2k+1}\frac{2k-1}{2k+1}\Big)\\ =& \frac{\pi^2}{6}\sum_{k=0}^{\infty}f(i)\frac{(2k+1)^2 -4k^2+2k}{(2k+1)^2}\\ =& \frac{\pi^2}{6}\sum_{k=0}^{\infty}f(i)\frac{6k+1}{(2k+1)^2}\\ \leq& \frac{\pi^2}{6}\sum_{k=0}^{\infty}f(i)\frac{6k+3}{(2k+1)^2}\\ =& \frac{\pi^2}{2}\sum_{k=0}^{\infty}\frac{1}{2k+1}f(i)\\ \leq& \frac{\pi^2}{2}\sum_{k=0}^{\infty}\frac{1}{k+1}f(i)\\ \end{align}\]where I used again the double summation permutation trick used before, and instead of the geometric sum trick, I used some bounds on the Basel problem that can be found on Wikipedia. The last inequality follows the simple fact that $2k+1 \geq k+1$. G follows from the fact that within the integration limits, the largest value of $x$ is $k+1$, so

\[\begin{align} \frac{1}{k+1} \int_k^{k+1}x^2dF(x)\leq& \frac{1}{k+1} \int_k^{k+1} (k+1)xdF(x)\\ =& \int_k^{k+1} xdF(x)\\ \end{align}\]and H follows from the definition of expectation. I understand actually up to equation 6 in this article, but I want to finish other stuff so, enough for today!

Soon more. Enjoy!